The Office for Learning and Teaching (OLT) is – now was – an Australian government body intended to support best practice in enhancing teaching and learning in the Higher Education sector.

It funded a number of research projects, which in 2013 included “What works and why? Understanding successful technology enhanced learning within institutional contexts” – driven by Monash University in Victoria and Griffith University in Queensland and led by Neil Selwyn.

The final report for this project has now been published.

They also have a project website running at http://bit.ly/TELwhatworksandwhy

Rather than focussing on the “state of the art”, the project focuses on the “state of the actual” – the current implementations of TELT practices in universities that are having some measure of success. It might not be the most inspiring list (more on that shortly) but it is valuable to have a snapshot of where we are, what educators and students value and the key issues that the executive face (or think they face) in pursuing further innovation.

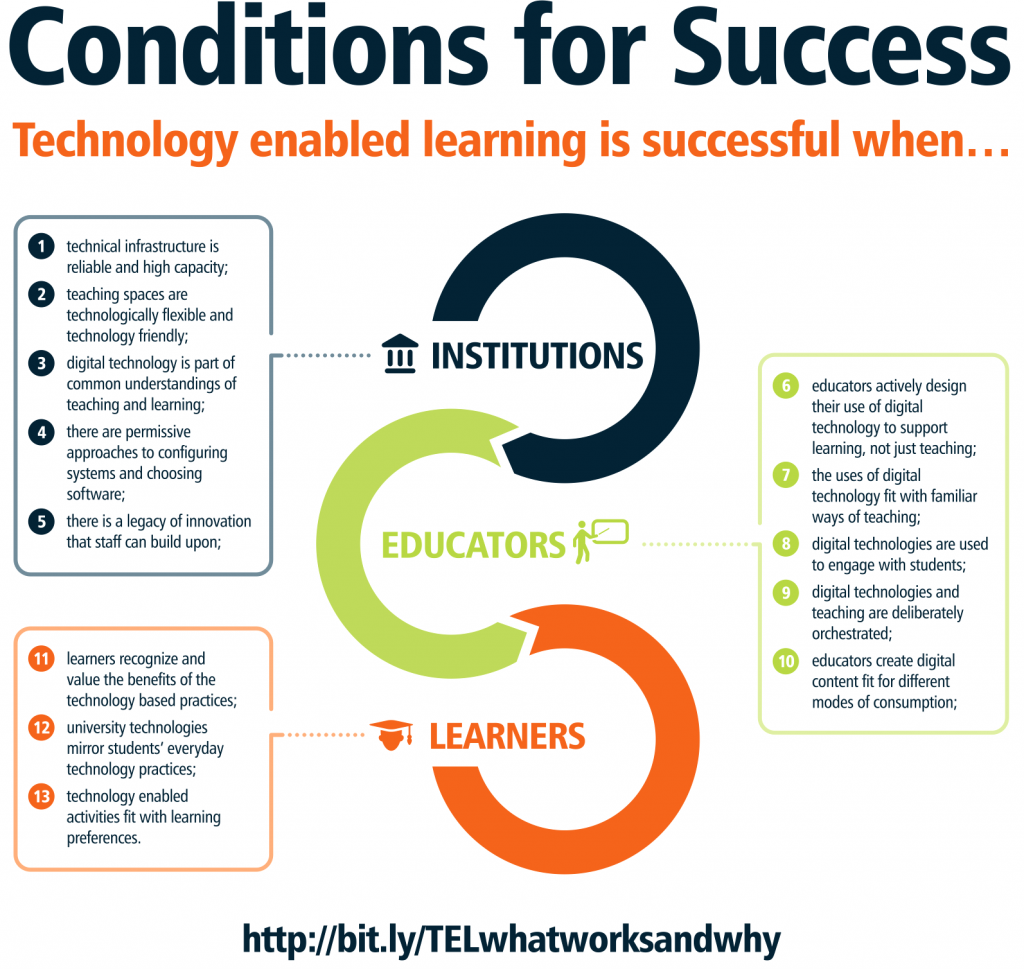

The report identifies 13 conditions for successful use of Tech Enhanced Learning in the institution and with teachers and students. (Strictly speaking, they call it technology enabled learning, which grates with me far more than I might’ve expected – yes, it’s ultimately semantics but for me the implication is that the learning couldn’t occur without the tech and that seems untrue. So because this is my blog, I’m going to take the liberty of using “enhanced”.)

The authors took a measured approach to the research, beginning with a large scale survey of teacher and student attitudes toward TEL which offered a set of data that informed questions in a number of focus groups. This then helped to identify a set of 10 instances of “promising practice” at the two participating universities that were explored in case studies. The final phase involved interviewing senior management at the 39 Australian universities to get feedback on the practicality of implementing/realising the conditions of success.

So far, so good. The authors make the point that the majority of research in the TELT field relates to more cutting edge uses in relatively specific cohorts and while this can be enlightening and exciting, it can overlook the practical realities of implementing these at scale within a university learning ecosystem. As a learning technologist, this is where I live.

What did they discover?

The most prominent ways in which digital technologies were perceived as ‘working’ for students related to the logistics of university study. These practices and activities included:

- Organising schedules and fulfilling course requirements;

- Time management and time-saving; and

- Being able to engage with university studies on a ‘remote’ and/or mobile basis

One of the most prominent practices directly related to learning was using digital technologies to ‘research information’; ‘Reviewing, replaying and revising’ digital learning content (most notably accessing lecture materials and recordings) was also reported at relatively high levels.

Why technologies ‘work’ – staff perspectives

Most frequently nominated ways in which staff perceived digital technologies were ‘working’ related to the logistics of university teaching and learning. These included being able to co-ordinate students, resources and interactions in one centralised place. This reveals a frequently encountered ‘reality’ of digital technologies in this project: technologies are currently perceived by staff and students to have a large, if not primary role to enable the act of being a teacher or student, rather than enabling the learning.

Nevertheless, the staff survey did demonstrate that technologies were valued as a way to support learning, including delivering instructional content and information to students in accessible and differentiated forms. This was seen to support ‘visual’ learning, and benefit students who wanted to access content at different times and/or different places.

So in broad terms, I’d suggest that technology in higher ed is seen pretty much exactly the same way we treat most technology – it doesn’t change our lives so much as help us to live them.

To extrapolate from that then, when we do want to implement new tools and ways of learning and teaching with technology, it is vital to make it clear to students and teachers exactly how they will benefit from it as part of the process of getting them on board. We can mandate the use of tools and people will grumblingly accept it but it is only when they value it that they will use it willingly and look for ways to improve their activities (and the tool itself).

The next phase of the research, looking at identified examples of “promising practice” to develop the “conditions for success”, is a logical progression, but looking at some of the practices used, it feels like the project was aiming too low. (And I appreciate that it is a low-hanging-fruit / quick-wins kind of project and people in my sphere are by our natures more excited by the next big thing but all the same, if we’re going to be satisfied with the bare minimum, will that stunt our growth?) In fairness, the report explicitly says “the cases were not chosen according to the most ‘interesting’, ‘innovative’ or ‘cutting-edge’ examples of technology use, but rather were chosen to demonstrate sustainable examples of TEL”.

Some of the practices identified are things that I’ve gradually been pushing in my own university so naturally I think they’re top shelf innovations 🙂 – things like live polling in lectures, flipping the classroom, 3D printing and virtual simulations. Others however included the use of online forums, providing videos as supplementary material and using “online learning tools” – aka an LMS. For the final three, I’m not sure how they aren’t just considered standard parts of teaching and learning rather than something promising. (But really, it’s a small quibble I guess and I’ll move on.)

The third stage asked senior management to rank the usefulness of the conditions of success that were identified from the case studies and to comment on how soon their universities would likely be in a position to demonstrate them. The authors seemed surprised by some of the responses – notably the resistance to the idea of taking “permissive approaches to configuring systems and choosing software”. As someone “on the ground” who bumps into these kinds of questions on a daily basis, this is where it became clear to me that the researchers have still been looking at this issue from a distance and with a slightly more theoretical mindset. There is no clear indication anywhere in this paper that they discussed this research with professional staff (i.e. education designers or learning technologists) who are often at the nexus of all of these kinds of issues. Trying to filter out my ‘professional hurt feelings’, it still seems a lot like a missed opportunity.

No, wait, I did just notice in the recommendations that “central university agencies” could take more responsibility for encouraging a more positive culture related to TEL among teachers.

Yup.

Moving on, I scribbled a bunch of random notes and thoughts over this report as I read it (active reading) and I might just share these in no particular order.

- Educators is a good word. (I’m currently struggling with teachers vs lecturers vs academics)

- How do we define how technologies are being used “successfully and effectively”?

- Ed Tech largely being used to enrich rather than change

- Condition of success 7 “the uses of digital technology fit with familiar ways of teaching” – scaffolded teaching

- condition of success 10 “educators create digital content fit for different modes of consumption” – great but it’s still an extra workload and skill set

- dominant institutional concerns include “satisfying a perceived need for innovation that precludes more obvious or familiar ways of engaging in TEL” – no idea how we get around the need for ‘visionaries’ at the top of the tree to have big announceables that seemingly come from nowhere. Give me a good listener any day.

- for learners to succeed with ed tech they need better digital skills (anyone who mentions digital natives automatically loses 10 points) – how do we embed this? What are the rates of voluntary uptake of existing study skills training?

- We need to normalise new practices but innovators/early adopters should still be rewarded and recognised

- it’s funny how quickly ed tech papers date – excitement about podcasts (which still have a place) makes this feel ancient

- How can we best sell new practices and ideas to academics and executive? Showcases or 5 min, magazine show style video clips (like Beyond 2000 – oh I’m so old)

- Stats about which tools students find useful – data is frustratingly simple. Highest rating tool is “supplementing lectures, tutorials, practicals and labs” with “additional resources” at 42%. (So 58% don’t find it useful? Hardly a ringing endorsement.)

- Tools that students were polled about were all online tools – except e-books. Where do offline tools sit?

- Why are students so much more comfortable using Facebook for communication and collaboration than the LMS?

- 60% of students still using shared/provided computers over BYOD. (Be interesting to see what the figure is now)

- Promising practice – “Illustrating the problem: digital annotation tools in large classes” – vs writing on the board?

- conditions for success don’t acknowledge policy or legal compliance issues (privacy, IP and copyright)

- conditions for success assume students are digitally literate

- there’s nothing in here about training

- unis are ok with failure in research but not teaching

- calling practices “innovations signals them as non-standard or exceptions” – good point. Easier to ignore them

- nothing in here about whether technology is fit for purpose

Ultimately I got a lot out of this report and will use it to spark further discussion in my own work. I think there are definitely gaps and this is great for me because it offers some direction for my own research – most particularly in the role of educational support staff and factors beyond the institution/educator/student that come into play.

Update: 18/4/16 – Dr Michael Henderson of Monash got in touch to thank me for the in-depth look at the report and to also clarify that “we did indeed interview and survey teaching staff and professional staff, including faculty based and central educational / instructional designers”

Which kind of makes sense in a study of this scale – certainly easy enough to pare back elements when you’re trying to create a compelling narrative in a final report I’m sure.
