One of the best things about my research and being part of a community of practice of education technologists/designers is that there are a lot of overlaps. While I’d hate to jump the gun, I think it’s pretty safe to say that harnessing the group wisdom of this community is going to be a core recommendation when it comes to looking for ways to better support Tech Enhanced Learning and Teaching practices in higher education.

So why not start now?

There’s a lively and active community of Ed Techs online (as you’d hope) and arguably we’re bad at our jobs if we’re not part of it. I saw an online “hack” event mentioned a couple of weeks ago – the “Digital is not the future – Hacking the institution from the inside” discussion/workshop/something and joined up.

There’s a homepage (that I only just discovered) and a Twitter hashtag #futurehappens (that I also wish I’d noticed) and then a Loomio discussion forum thing that most of the online action has been occurring in.

Just a side note on Loomio as a tool – some of the advanced functionality (voting stuff) seems promising but the basics are a little poor. Following a discussion thread is quite difficult when new posts are shown as separate items that include only part of the topic heading and don’t preview the new response (either on screen or in the email digest). Biographical information about participants was also scant.

All the same, the discussions muddled along and there were some very interesting and thoughtful chats about a range of issues that I’ll hopefully do justice to here.

It’s a joint event organised by the London School of Economics (LSE) and the University of the Arts London (UAL) but open to all. Unsurprisingly then, most participants seem to be from the UK, so discussions were a little staggered across time zones. There was also an f2f event that generated a sudden surge of slightly decontextualised posts, but there was still quite a bit of interest to come from that (for an outsider).

The “hack” – I have to use inverted commas because I feel silly using the term with a straight face but all power to the organisers and it’s their baby – was intended to examine the common issues Higher Ed institutions face in innovating teaching and learning practices, with a specific focus on technology.

The guiding principles are:

Rule 1: We are teaching and learning focused *and* institutionally committed

Rule 2: What we talk about here is institutionally/nationally agnostic

Rule 3: You are in the room with the decision makers. What we decide is critical to the future of our institutions. You are the institution

Rule 4: Despite the chatter, all the tech ‘works’ – the digital is here, we are digital institutions. Digital is not the innovation.

Rule 5: We are here to build not smash

Rule 6: You moan (rehearse systemic reasons why you can’t effect change – see Rule 3), you get no beer (wine, juice, love, peace, etc.)

We have chosen 5 common scenarios which are often the catalyst for change in institutions. As we noted above, you are in the room with the new VC and you have 100 words in each of the scenarios below to effectively position what we do as a core part of the institution. Why is this going to make our institution more successful/deliver the objectives/save my (the VC’s) job? How do we demonstrate what we do will position the organisation effectively? How do we make sure we stay in the conversation and not be relegated to simply providing services aligned with other people’s strategies?

The scenarios on offer are below – they seemed to fall by the wayside fairly quickly as the conversation evolved but they did spark a range of different discussions.

Scenario 1

Strategic review of the institution and budget planning for 2020

Scenario 2

Institutional restructure because of a new VC

Scenario 3

Undertaking of an institution wide pedagogical redesign

Scenario 4

Responding to and implementing TEF

Scenario 5

Institutional response to poor NSS/student experience results

(It was assumed knowledge that TEF is the upcoming UK govt Teaching Excellence Framework – new quality standards – and the NSS is the National Student Survey – student satisfaction feedback largely.)

Discussions centred around what we as Ed. Designers/Techs need to do to “change the discourse and empower people like us to actively shape teaching and learning at our institutions”. Apart from the ubiquitous “more time and more money” issue that HE executives hear from everyone, several common themes emerged across the scenarios and other posts. Thoughts about university culture, our role as experts and technology consistently came to the fore. Within these could be found specific issues that need to be addressed and a number of practical (and impractical) solutions that are either in train or worth considering.

On top of this, I found a few questions worthy of further investigation as well as broader topics to pursue in my own PhD research.

I’m going to split this into a few posts because there was a lot of ground covered. This post will look at some of the common issues that were identified, and from there I will expand on some of the practical solutions that are being implemented or considered, additional questions that this event raised for me and a few other random ideas that it sparked.

Issues identified

There was broad consensus that we are in the midst of a period of potentially profound change in education due to the affordances offered by ICT and society’s evolving relationship with information and knowledge creation. As education designers/technologists/consultants, many of us sit fairly low in the university decision-making scheme of things, but our day-to-day contact with lecturers and students (and emerging pedagogy and technology) gives us a unique perspective on how things are and how they might/should be.

Ed Tech is being taken up in universities but we commonly see it used to replicate or at best augment long-standing practices in teaching and learning. Maybe this is an acceptable use but it is often a source of frustration to our “tribe” when we see opportunities to do so much more in evolving pedagogy.

Peter Bryant described it as “The problem of potential. The problem of resistance and acceptance” and went on to ask “what are the key messages, tools and strategies that put the digital in the heart of the conversation and not as a freak show, an uncritical duplication of institutional norms or a fringe activity of the tech savvy?”

So the most pressing issue – and the purpose of the hack itself – is what we can do (and how) to support and drive the change needed in our institutions. Change in our approach to the use of technology enhanced learning and teaching practices and perhaps even to our pedagogy itself.

Others disagreed that a pedagogical shift was always the answer. “I’m not sure what is broken about university teaching that needs fixing by improved pedagogy… however the economy, therefore the job market is broken I think… when I think about what my tools can do to support that situation, the answers feel different to the pedagogical lens” (Amber Thomas)

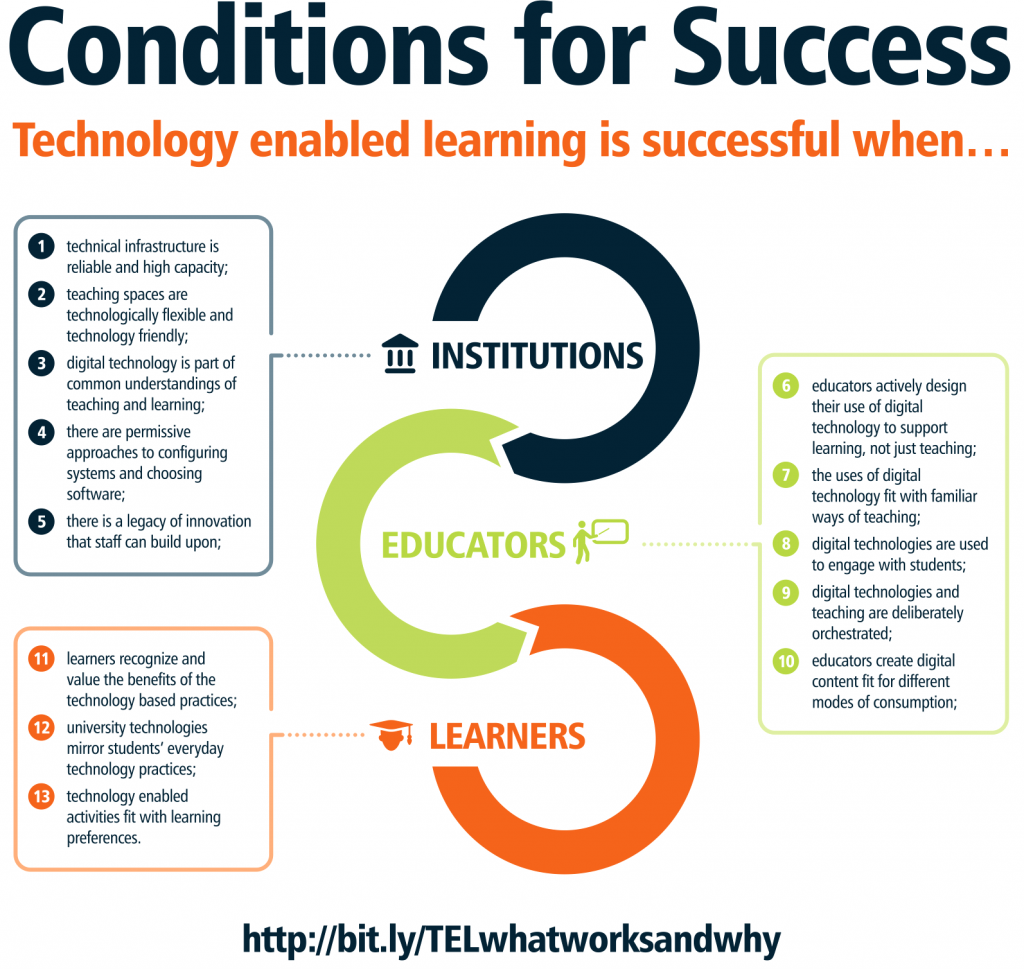

The very nature of how we define pedagogy arose tangentially a number of times – is it purely about practices related to the cognitive and knowledge-building aspects of teaching and learning, or might we extend it to include the ‘job’ of being a student or teacher? That is, the logistical aspects of studying: accessing data, communication, administrivia and the other things that technology can be particularly helpful in making more convenient. I noted the recent OLT paper – What works and why? – that found that students and teachers valued these kinds of tools highly. Even if these activities aren’t the textbook definition of pedagogy, they are a key aspect of teaching and learning support and I’d argue that we should give them equal consideration.

Several other big-picture issues were identified – none with simple solutions but all things that must be taken into consideration if we hope to be a part of meaningful change.

- The sheer practicality of institution-wide change: the many different needs of the various disciplines necessitate some customised solutions.

- The purpose of universities and university education – tensions between the role of the university in facilitating research to extend human knowledge and the desire of many students to gain the skills and knowledge necessary for a career.

- The very nature of teaching and learning work can change and is changing – this needs to be acknowledged and accommodated. New skills are needed to create content and experiences and to make effective use of the host of new tools on offer. Students have changing expectations of access to information and their lecturers’ time, created by the reality of our networked world. These are particularly pointy issues when we consider the rise of casualisation in the employment of tutors and lecturers and the limits on the work that they are paid to do.

Three key themes emerged consistently across all of the discussion posts in terms of how we education support types (we really do need a better umbrella term) can be successful in the process of helping to drive change and innovation in our institutions: institutional culture, our role as “experts”, and technology. I’ll look at culture and expertise for now.

Culture

It was pretty well universally acknowledged that, more than policy or procedure or resourcing, the culture of the institution is at the heart of any successful innovations. Culture itself was a fairly broad set of topics though and these ranged across traditional/entrenched teaching and learning practices, how risk-averse or flexible/adaptable an institution is, how hierarchical it is and to what extent the ‘higher-ups’ are willing to listen to those on the ground, willingness to test assumptions, aspirational goals and strategy and change fatigue.

Some of the ideas and questions to emerge included:

- How do we change the discourse from service (or tech support) to pedagogy?

- “The real issue is that money, trust, support, connectedness and strategy all emanate from the top” (Peter Bryant)

- “the prerequisite for change is an organisational culture that is discursive, open and honest. And there needs to be consensus about the centrality of user experience.” (Rainer Usselmann)

- “We need to review our decision making models and empower agility through more experimentation” (Silke Lange) – My take on this: probably true, but perhaps not the right thing to say to the executive, given the implication that they’re currently making poor decisions. Perhaps if we frame this more in terms of a commitment to continuous improvement, we might be able to remove the sense of judgement about current practices and decision makers?

- “we will reduce the gap between the VC and our students… the VC will engage with students in the design of the organisation so it reflects their needs. This can filter down to encourage students and staff to co-design courses and structures, with two way communication” (Steve Rowett)

- “In the private (start-up) sector, change is all you know. Iterate, pivot or persevere before you run out of money. That is the ‘Lean Start-up’ mantra… create a culture and climate where it is expected and ingrained behaviour then you constantly test assumptions and hypotheses” (Rainer Usselmann)

- “Theoretical and practical evidence is important for creating rationale and narratives to justify the strategy” (Peter Bryant) – I agree; we need to use a research-led approach to speak to senior academic execs.

While change and continuous improvement are important, in many places they have come to be almost synonymous with management. And not always good management – sometimes just management for the sake of appearing to be doing things. It’s also not just about internal management – changes in government and government policy or discipline practices can all necessitate change.

One poster described how change-fatigued lecturers came to respond to an ongoing stream of innovations relating to teaching and learning practice coming down from on high.

I don’t think anyone will be surprised to hear that staff got very good at re-describing their existing, successful practice in whatever the language of the week was.

Culture is arguably the hardest thing to change in an organisation because there are so many different perspectives of it held by so many different stakeholders. The culture is essentially the philosophy of the place and it will shape the kinds of policy that determine willingness to accept risk, open communication, transparency and reflection – some of the things needed to truly cultivate change.

Experts

Our status (as education designers/technologists/support with expertise) in the institution and the extent to which we are listened to (and heard) was seen as another major factor in our ability to support and drive innovation.

There were two key debates in this theme: how we define/describe ourselves and what we do, and how we should best work with academics and the university.

Several people noted the difference between education designers and education technologists.

“Educational developers cannot be ignorant of educational technologies any more than Learning Technologists can be ignorant of basic HE issues (feedback, assessment, teaching practices, course design, curriculum development etc).”

Perhaps it says something about my personal philosophy or the fact that I’ve always worked in small multi-skilled teams, but the idea that one might be able to responsibly recommend and support tools without an understanding of teaching and learning practice seems unthinkable. This was part of a much larger discussion of whether we should even be talking in terms of eLearning any more or just trying to normalise it so that it is all just teaching and learning. Valid points were made on both sides.

“Any organisational distinction between Learning & Teaching and eLearning / Learning Technology is monstrous. Our goal should be to make eLearning so ubiquitous that as a word it becomes obsolete.” (Sonja Grussendorf)

“I also think that naming something, creating a new category, serves a political end, making it visible in a way that it might not be otherwise.” (Martin Oliver)

“it delineates a work area, an approach, a mindset even…Learning technology is not a separate, secondary consideration, or it shouldn’t be, or most strongly: it shouldn’t be optional.” (Sonja Grussendorf)

There was also an interesting point made that virtually nobody talks about e-commerce any more, it’s just a normal (and sometimes expected) way of doing business now.

For me, the most important thing is the perception of the people that I am working with directly – the lecturers. While there is a core of early adopters and envelope pushers who like to know that they have someone that speaks their language and will entertain their more “out-there” ideas when it comes to ed tech and teaching practices, many more just want to go about the business of teaching with the new learning tools that we have available.

As the Education Innovation Office, or as a learning technologist, I’m kind of the helpdesk guy for the LMS and other things. Neither of these groups necessarily thinks of me in terms of broader educational design (that might or might not be enhanced with Ed Tech). So I’ve been thinking a lot lately about rebranding to something more like Education Support or Education Excellence. (I’ve heard whispers of a Teaching Eminence project in the wind, though I haven’t yet been involved in any discussions.)

But here’s the kicker – education technology and innovation isn’t just about better teaching and learning. For the college or the university, it’s an opportunity to demonstrate that yes, we are keeping up with the Joneses, we are progressive, we are thought leaders. We do have projects that can be used to excite our prospective students, industry partners, alumni, government and benefactors. On this level, keeping “innovation” or “technology” in a title is far more important. Pragmatically, if it can help me to further the work that I’m doing by being connected to the “exciting” parts of university activity, it would seem crazy not to.

There was some debate about whether our role is to push, lead or guide new practice. I think this was largely centred on differences of opinion on approaches to working with academics. As I’ve mentioned, my personal approach is about understanding their specific teaching needs (removing technology from the conversation where possible) and recommending the best solutions (tech and pedagogic). Other people felt that as the local “experts”, we have a responsibility to push new innovations for the good of the organisation.

“Personally I’m not shy about having at least some expertise and if our places of work claim to be educational institutions then we have a right to attempt to make that the case… It’s part of our responsibility to challenge expectations and improve practices as well” (David White)

“we should pitch ‘exposure therapy’ – come up with a whole programme that immerses teaching staff in educational technology, deny them the choice of “I want to do it the old fashioned way” so that they will realise the potential that technologies can have in changing not only, say, the speed and convenience of delivery of materials (dropbox model), but can actually change their teaching practice.” (Sonja Grussendorf)

I do have a handful of personal Ed Tech hobby horses that the College hasn’t yet taken an interest in (digital badges, ePortfolios, gamification) that I have advocated with minimal success, and I have to concede that this is largely because I didn’t link these effectively enough to teaching and learning needs. Other participants held similar views.

“Don’t force people to use the lift – convince them of the advantages, or better still let their colleagues convince them.” (Andrew Dixon)

A final thought that these discussions triggered in me – though I didn’t particularly raise it on the board – came from the elephant in the room that while we might be the “experts” – or at least have expertise worth heeding – we are here having this discussion because we feel that our advice isn’t always listened to.

Is this possibly due to an academic / professional staff divide? Universities exist for research and teaching and these are the things most highly valued – understandably. Nobody will deny that a university couldn’t function day to day without the work of professional staff, but perhaps there is a hierarchy/class system in which some opinions inherently carry less weight. Not all opinions, of course – academics will gladly accept the advice of the HR person about their leave allocation or the IT person in setting up their computer – but when we move into the territory of teaching and learning, scholarship if you like, perhaps there is an almost unconscious disconnect occurring.

I don’t say this with any particular judgement and I don’t even know if it’s true – my PhD supervisor was aghast when I suggested it to her – but everyone has unconscious biases and maybe this is one of them.

Just a thought anyway but I’d really like to hear your thoughts on this or anything else that I’ve covered in this post.

Next time, on Screenface.net – the role of technology in shaping change and some practical ideas for action.